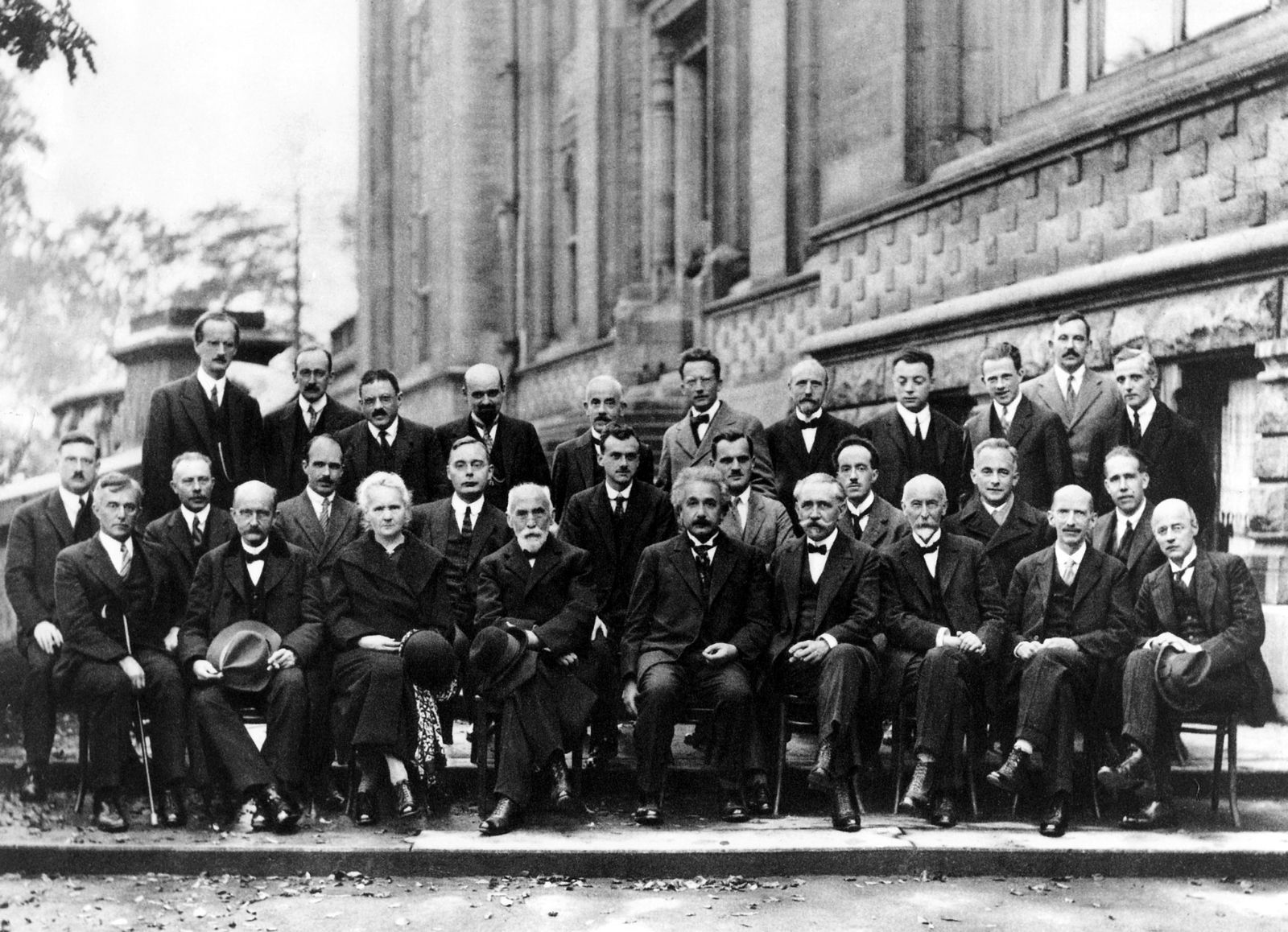

OCAML members, pre-pandemic picture

Objectively Casual Association for Machine Learning

Adam

Alicja

Frederic Grabowski, frederic.grabowski@ippt.pan.pl, IPPT PAN

Kuba Perlin

Paweł

Paweł, submitted on 2021-03-20. Will be discussed on 2021-06-07.

comic from xkcd.com

Everybody knows that margarine consumption causes divorces (at least in Maine) and that a PhD in sociology can help you successfully launch a space rocket. Or… maybe not? More seriously, how do we calculate vaccine efficacy? How do we build robust healthcare models that generalize across different hospitals? (See Building Reliable ML: Should you trust NNs?) Although many popular ML algorithms operate at the level of statistical associations, the causal aspects are critical for model generalizability, for robustness, and for applications in domains where high-stakes decisions are made.
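A quick worked example for the vaccine question. In a randomized controlled trial, randomization breaks the link between who gets the vaccine and every other risk factor, so the difference in attack rates can be read causally. Efficacy is then commonly reported as a relative risk reduction. The function name and the trial counts below are made up for illustration.

```python
def vaccine_efficacy(cases_vaccinated, n_vaccinated, cases_placebo, n_placebo):
    """Relative risk reduction: VE = 1 - (attack rate vaccinated / attack rate placebo).
    Only has a causal reading if the two arms were randomized."""
    attack_rate_vaccinated = cases_vaccinated / n_vaccinated
    attack_rate_placebo = cases_placebo / n_placebo
    return 1.0 - attack_rate_vaccinated / attack_rate_placebo

# Hypothetical trial with 20,000 participants per arm.
ve = vaccine_efficacy(cases_vaccinated=10, n_vaccinated=20_000,
                      cases_placebo=100, n_placebo=20_000)
print(f"{ve:.2f}")  # prints 0.90
```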

Reading:

Bonus:

Kuba, submitted on 2020-10-31.

Visual Question Answering (VQA): given an image and an English question about it, produce a truthful text answer. VQA is perhaps the most studied task that combines two strikingly different modalities. I selected some recent papers from DeepMind on the topic.

Reading:

Paweł, submitted on 2020-11-01.

Talent wins games, but teamwork and intelligence win championships.

– Michael Jordan

Everybody knows that RL excels at one-vs-one games such as chess, Go, or StarCraft. But nowadays teamwork is becoming more and more important: think of a fleet of autonomous taxicabs or a swarm of delivery drones. How does RL work in a scenario with many autonomous agents? And, more importantly, does multi-agent RL still work when impostors try to harm the team? (See Avalon or Among Us.)

Reading:

Paweł, submitted on 2020-12-01 (rebranded on 2021-02-14).

We build machine learning systems by taking a big neural network and feeding it millions of examples. However, for every task there are more and less sensible architectures. For example, CNNs employ an inductive bias: “To recognize an object, find ears, nose, wheels. To recognize ears, nose, wheels, find appropriate edges. To recognize edges, ...”.

Very often we humans already have a pretty good intuition of what the solution should look like and which subproblems need to be solved. But how do we guide the NN to do the same?
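To make the lowest level of that hierarchy concrete, here is a hand-crafted vertical-edge filter slid over a toy image. In a real CNN the filter weights are learned rather than designed by hand; the inductive bias is that one small filter looks only at a local patch and is reused at every position (weight sharing). The toy image and `conv2d_valid` helper are illustrative assumptions.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one small kernel over the whole image (no padding, stride 1).
    Strictly this is cross-correlation, which is what most DL libraries call convolution."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 5x6 image: dark left half, bright right half, i.e. one vertical edge.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Sobel-like vertical-edge detector; the same 9 weights are used at every position.
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-2.0, 0.0, 2.0],
                          [-1.0, 0.0, 1.0]])

response = conv2d_valid(image, vertical_edge)
print(response)  # large values only where the window straddles the edge
```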

comic from xkcd.com

Reading:

Bonus:

Paweł, submitted on 2021-02-03.

Quoting the wisest, “Universe is non-Euclidean, why should data be?”. Let’s explore how the ideas from geometry and topology can help neural networks work better.

In particular, we will learn that every classification problem can be solved (at least from a topologist’s perspective) and how autoencoders are linked to the Manifold Hypothesis. We will also discuss whether a neural network can be validated... without a validation data set!

Reading:

Bonus:

Paweł, submitted on 2021-02-03.

We all know and love ResNets, which come in many flavours: ResNet-50, ResNet-101, ResNet-1202… But what if we wanted to train an infinitely deep ResNet? We arrive at Neural ODEs!
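The connection in one picture: a residual block computes x + h·f(x), which is exactly one explicit Euler step of the ODE dx/dt = f(x). A minimal sketch, assuming a fixed (not learned) linear f so that the exact solution is known, and assuming the residual branch is scaled by a step size h = 1/depth (plain ResNets use h = 1). Stacking more, thinner blocks approaches the continuous-time solution. In an actual Neural ODE, f is a neural network and the loop is replaced by an ODE solver.

```python
import numpy as np

def f(x):
    """The residual branch. Here a fixed linear map (no learning) for illustration."""
    A = np.array([[0.0, -1.0],
                  [1.0,  0.0]])  # dx/dt = A x rotates x around the origin
    return A @ x

def resnet_forward(x0, depth, total_time=1.0):
    """Stack `depth` residual blocks x <- x + h * f(x) with h = total_time / depth.
    Each block is one explicit Euler step of dx/dt = f(x)."""
    h = total_time / depth
    x = x0.copy()
    for _ in range(depth):
        x = x + h * f(x)
    return x

x0 = np.array([1.0, 0.0])
exact = np.array([np.cos(1.0), np.sin(1.0)])  # exact ODE solution at t = 1
for depth in (1, 10, 100, 1000):
    err = np.linalg.norm(resnet_forward(x0, depth) - exact)
    print(depth, err)  # the error shrinks roughly like 1 / depth
```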

Reading:

Bonus:

Paweł, Adam, Frederic G., submitted on 2021-05-24.

Time to take a look at GNNs in real life!

Molecules:

Other applications:

General resources:

Frameworks:

Frederic G., submitted on 2021-05-16. Discussed on 2021-05-24.

Abstract: We will learn what triplet loss is and revisit its usefulness for training classifiers. Next, we’ll dive deeper into the loosely related topic of information retrieval, using differentiable sorting and top-k classification.
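A minimal sketch of the triplet loss, assuming squared Euclidean distances between embeddings and a margin of 1.0 (both common choices, not necessarily the ones used in the reading). The loss is zero once the negative is farther from the anchor than the positive by at least the margin, so only "hard" triplets produce gradients.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge-style triplet loss with squared Euclidean distances:
    max(0, ||a - p||^2 - ||a - n||^2 + margin), averaged over the batch."""
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)
    return np.mean(np.maximum(0.0, d_pos - d_neg + margin))

a = np.array([0.0, 0.0])
p = np.array([0.1, 0.0])
easy = triplet_loss(a, p, np.array([3.0, 0.0]))  # negative far away: loss 0
hard = triplet_loss(a, p, np.array([0.2, 0.0]))  # negative too close: loss > 0
```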

Reading:

Bonus:

Alicja, submitted on 2021-04-25. Discussed on 2021-05-10.

Explaining Machine Learning predictions: is it even possible?

Let's try to find out.

Reading:

Bonus:

Kuba, discussed on 2020-10-31.

Knowledge distillation refers to training a small network with the help of a pre-trained large network. The hope is that the small network ends up better at the task than if it had been trained directly. Small networks are faster to evaluate and require less memory; an example use case is mobile robotics, where robots cannot carry a GPU around.
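One common recipe, soft-target distillation in the style of Hinton et al., trains the student to match the teacher's temperature-softened output distribution while still fitting the true labels. A minimal NumPy sketch of that loss; `temperature` and `alpha` are hyperparameters and the default values here are placeholders, not recommendations.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels, temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(labels, student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student_T = softmax(student_logits, temperature)
    # KL on softened distributions; the T^2 factor keeps its gradient scale
    # comparable to the hard-label term when T changes.
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student_T)), axis=-1)
    soft_term = temperature ** 2 * kl
    # Ordinary cross-entropy with the hard labels, at temperature 1.
    p_student = softmax(student_logits)
    hard_term = -np.log(p_student[np.arange(len(labels)), labels])
    return np.mean(alpha * soft_term + (1 - alpha) * hard_term)
```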

Reading:

Bonus, related to adversarial examples:

Paweł, discussed on 2021-02-17.

When we fit a line to data, we minimize the sum of squared vertical distances (residuals). But why does this yield a sensible estimate of the slope? And what is the “uncertainty” of that slope? Bayesian (Deep) Learning answers these questions by interpreting fitting as a Bayesian update from prior to posterior knowledge, and tries to do the same for deep learning models by putting uncertainty on the learned weights.

But how is that related to ensemble models?!
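A minimal worked example for the line-fitting question, under simplifying assumptions: a line through the origin (slope only), Gaussian noise with a known standard deviation, and a Gaussian prior on the slope. Gaussian noise is what turns maximum likelihood into least squares, and with this conjugate prior the posterior over the slope is again Gaussian, so its standard deviation is the "uncertainty" of the slope. The data and prior width are illustrative.

```python
import numpy as np

def slope_posterior(x, y, noise_std=1.0, prior_std=10.0):
    """Posterior N(mean, std^2) over w in y = w * x + eps, eps ~ N(0, noise_std^2),
    with prior w ~ N(0, prior_std^2)."""
    precision = 1.0 / prior_std**2 + np.sum(x * x) / noise_std**2
    mean = (np.sum(x * y) / noise_std**2) / precision
    return mean, np.sqrt(1.0 / precision)

rng = np.random.default_rng(0)
x = np.linspace(-1, 1, 50)
y = 2.0 * x + rng.normal(scale=0.3, size=x.shape)  # true slope 2

mean, std = slope_posterior(x, y, noise_std=0.3)
least_squares = np.sum(x * y) / np.sum(x * x)  # ordinary least squares, no intercept
print(mean, std, least_squares)
```

With a broad prior the posterior mean is essentially the least-squares slope; a narrower prior shrinks it toward zero, exactly like ridge regression.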

Reading:

Bonus:

Paweł and Adam, discussed on 2020-12-01.

[Achilles] Mr T., why do NNs work?

[Turtle] Because they are universal approximators, aren’t they? Any reasonable function can be approximated by a deep enough net.

[Achilles] But this is boooring… You can accomplish the same result with a look-up table! I wonder why they learn…

[Turtle] Oh, you mean why deep learning works? I don’t know, but let me show you NTK…
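Roughly, the NTK of a network f with parameters θ is the kernel Θ(x, x') = ⟨∇θ f(x), ∇θ f(x')⟩. A minimal sketch of this empirical NTK for a two-layer ReLU network f(x) = a·relu(Wx)/√m, with gradients written out by hand (the architecture and the 1/√m scaling are assumptions chosen to keep formulas short). In the NTK analysis, under this kind of parameterization and a small enough learning rate, the kernel barely changes during training as the width m grows, so the trained network behaves like kernel regression with Θ.

```python
import numpy as np

def empirical_ntk(X, W, a):
    """Theta(x, x') = <grad f(x), grad f(x')> for f(x) = a . relu(W x) / sqrt(m),
    summing over gradients w.r.t. both W and a.
    X: (n, d) inputs, W: (m, d) hidden weights, a: (m,) output weights."""
    m = W.shape[0]
    pre = X @ W.T                    # (n, m) pre-activations w_j . x
    act = np.maximum(pre, 0.0)       # relu(w_j . x)
    mask = (pre > 0).astype(float)   # relu'(w_j . x)
    # df/da_j = relu(w_j . x) / sqrt(m)
    k_a = act @ act.T / m
    # df/dw_j = a_j * relu'(w_j . x) * x / sqrt(m)
    k_w = ((mask * a**2) @ mask.T) * (X @ X.T) / m
    return k_a + k_w

rng = np.random.default_rng(0)
n, d, m = 5, 3, 10_000
X = rng.normal(size=(n, d))
W = rng.normal(size=(m, d))
a = rng.normal(size=m)
K = empirical_ntk(X, W, a)  # (5, 5) Gram matrix: symmetric, positive semi-definite
```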

Reading:

Bonus:

Paweł, discussed on 2021-02-10.

How does one choose the best set of hyperparameters for an NN, decide which one-armed bandit to play, or find the catchiest new topic for OCAML? And what do these things have in common with fitting a line to noisy data (or a curve, or an infinite family of curves)? If the function being optimized is noisy and our observations are limited, we should consider Bayesian Optimization, driven by flexible regression models called Gaussian Processes (GPs). During this session we will read a review paper [1], which summarizes BayOpt and GPs in fewer than thirty pages, and then see what happens when deep learning researchers enter the challenge [2,3].
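A minimal sketch of both ingredients, under assumptions that are ours and not the review's: a 1-D search space [0, 1], a zero-mean GP with a squared-exponential kernel of fixed length scale, and the upper-confidence-bound acquisition rule (one of several; expected improvement is another common choice). `toy_objective` is a hypothetical, noise-free stand-in for an expensive black box; the `noise` parameter is where observation noise would be modelled.

```python
import numpy as np

def rbf(A, B, length_scale=0.2):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    return np.exp(-0.5 * (A[:, None] - B[None, :]) ** 2 / length_scale**2)

def gp_posterior(x_train, y_train, x_test, noise=1e-3):
    """Zero-mean GP regression: posterior mean and std at x_test.
    `noise` is the assumed observation-noise variance."""
    K = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    K_s = rbf(x_train, x_test)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = 1.0 - np.sum(v**2, axis=0)  # prior variance rbf(x, x) = 1
    return mean, np.sqrt(np.maximum(var, 0.0))

def bayes_opt(f, n_init=3, n_iter=20, beta=2.0, seed=0):
    """Maximize f on [0, 1] with a GP surrogate and an upper-confidence-bound rule."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 201)  # candidate points
    x = rng.uniform(0.0, 1.0, size=n_init)
    y = f(x)
    for _ in range(n_iter):
        mean, std = gp_posterior(x, y, grid)
        x_next = grid[np.argmax(mean + beta * std)]  # exploit (mean) vs explore (std)
        x = np.append(x, x_next)
        y = np.append(y, f(x_next))
    best = np.argmax(y)
    return x[best], y[best]

def toy_objective(x):
    """Hypothetical stand-in for an expensive black box; maximum at x = 0.7."""
    return -(x - 0.7) ** 2

best_x, best_y = bayes_opt(toy_objective)
```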

Reading:

Bonus:

Kuba, discussed on 2021-01-22.

Humans arguably factorise situations (world states) into familiar building blocks: if you see a falling apple for the first time in your life, but you’ve seen apples before and you’ve also seen pears fall, you know what’s going to happen. Disentanglement, or factorisation, in deep learning can produce cool sets of generated 2D images in which faces gradually change orientation or hair colour… But how can it be achieved and formalised? Let’s try to find out.
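As one concrete (and well-known, though not necessarily covered in the reading) approach, β-VAE (Higgins et al.) simply up-weights the KL term of the usual VAE objective, putting extra pressure on the latent code to match a factorised standard-normal prior. A minimal sketch of the per-batch loss, assuming a diagonal-Gaussian encoder and a squared-error reconstruction term (a Gaussian likelihood up to scale and constants).

```python
import numpy as np

def beta_vae_loss(x, x_recon, mu, log_var, beta=4.0):
    """Reconstruction error + beta * KL(q(z|x) || N(0, I)), averaged over the batch.
    Encoder output q(z|x) = N(mu, exp(log_var)), diagonal; beta = 1 is a plain VAE."""
    recon = np.sum((x - x_recon) ** 2, axis=-1)
    # Closed-form KL between a diagonal Gaussian and the standard normal prior.
    kl = 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var, axis=-1)
    return np.mean(recon + beta * kl)
```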

Reading:

Bonus:

Adam, Frederic, Kuba, discussed on 2020-10-31.

Transformers use self-attention to process sequences differently from recurrent architectures. The length of the ‘information path’ through the network between any two sequence elements is constant, as opposed to O(n) for RNNs. Transformer-based architectures have become very popular lately, with applications beyond NLP.
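A minimal single-head sketch of scaled dot-product self-attention (no masking, no multi-head split; random matrices stand in for learned projections). Output i is a weighted average of all value vectors computed in one step, so information from position j reaches position i through a single layer regardless of |i - j|, whereas an RNN must carry it through |i - j| recurrent steps.

```python
import numpy as np

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention without masking.
    X: (n, d_model). Returns the (n, d_v) outputs and the (n, n) attention weights."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])               # every position vs every position
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d_model, d_k = 4, 8, 8
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, weights = self_attention(X, W_q, W_k, W_v)
```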

Reading:

Bonus:

Alicja, discussed on 2020-11-01.

Universe is non-Euclidean, why should data be? Graph Neural Networks can save the day!
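GNNs come in many flavours; as one simple example, here is a sketch of a single graph-convolution layer in the style of Kipf & Welling. Each node averages (with degree normalisation) the features of its neighbours and itself, then applies shared weights, so the layer works on any graph and does not care how the nodes are numbered. The toy graph and random weights are illustrative.

```python
import numpy as np

def gcn_layer(A, H, W):
    """H' = ReLU(D^-1/2 (A + I) D^-1/2 H W).
    A: (n, n) symmetric adjacency, H: (n, f_in) node features, W: (f_in, f_out)."""
    A_hat = A + np.eye(A.shape[0])          # self-loops keep each node's own features
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    A_norm = d_inv_sqrt[:, None] * A_hat * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ H @ W, 0.0)  # aggregate neighbours, transform, ReLU

# Path graph 0 - 1 - 2 with one-hot node features.
A = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
H = np.eye(3)
W = np.random.default_rng(0).normal(size=(3, 2))
H_next = gcn_layer(A, H, W)
```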

Reading:

Bonus:

Kuba, discussed on 2020-10-31.

Normalising Flows are invertible, differentiable transformations used to build complicated yet (easily) learnable probability distributions, for example for use in variational inference.
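The key identity is the change of variables: if x = f(z) with z ~ p_z and f invertible, then log p(x) = log p_z(f⁻¹(x)) - log|det ∂f/∂z|. A minimal sketch with a hypothetical one-dimensional flow x = b + a·sinh(z) over a standard-normal base, chosen only because its inverse and Jacobian are easy to write down. Real flows stack many such invertible layers with learnable parameters in many dimensions.

```python
import numpy as np

def standard_normal_logpdf(z):
    return -0.5 * z**2 - 0.5 * np.log(2 * np.pi)

class SinhAffineFlow:
    """Toy flow x = b + a * sinh(z), z ~ N(0, 1); a > 0 and b would be learnable."""
    def __init__(self, a=1.0, b=0.0):
        self.a, self.b = a, b

    def forward(self, z):
        """Sampling direction: base noise -> data."""
        return self.b + self.a * np.sinh(z)

    def inverse(self, x):
        return np.arcsinh((x - self.b) / self.a)

    def log_prob(self, x):
        """Change of variables with dx/dz = a * cosh(z), evaluated at z = f^-1(x)."""
        z = self.inverse(x)
        log_det = np.log(self.a) + np.log(np.cosh(z))
        return standard_normal_logpdf(z) - log_det

flow = SinhAffineFlow(a=2.0, b=1.0)
samples = flow.forward(np.random.default_rng(0).normal(size=5))
```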

I especially encourage you to vote for this topic if you’re also not very familiar with variational inference / Bayesian ML: we can learn together (and hopefully at least one person will know a bit more and can help out…)

Reading:

Bonus:

Paweł, discussed on 2020-10-30.

Adversarial examples are inputs crafted to trick neural nets, much as magic tricks and optical illusions fool the human brain. It’s useful to know how to defend your neural network against these kinds of attacks… or how to use them during training to your advantage!
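The classic first attack is the Fast Gradient Sign Method (Goodfellow et al.): nudge every input coordinate by ε in the direction that increases the loss. A minimal sketch against a hypothetical logistic-regression "network", where the input gradient of the binary cross-entropy has the closed form (p - y)·w. Adversarial training would add such perturbed inputs to the training batches.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def fgsm(x, y, w, b, eps):
    """FGSM for p(y=1|x) = sigmoid(w.x + b) with binary cross-entropy loss.
    The gradient of the loss w.r.t. the input x is (p - y) * w."""
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w
    return x + eps * np.sign(grad_x)  # each coordinate moves by exactly eps

w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([0.5, -0.5, 0.2])        # correctly classified as class 1
x_adv = fgsm(x, y=1.0, w=w, b=b, eps=0.5)
print(sigmoid(x @ w + b), sigmoid(x_adv @ w + b))  # confidence drops below 0.5
```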

Reading:

Bonus:

Paweł, discussed on 2020-11-01.

Drug design is hard – molecules are tricky to describe and it’s not obvious at all how to predict the properties of a proposed molecule just from its chemical structure. Can neural nets, aka universal approximators, solve this problem? Let’s figure it out!

Reading:

Paweł, discussed on 2021-01-11.

Machine learning is great: we are entering the age of self-driving cars, GPTs writing newspaper articles, and fast medical diagnosis. But how much should we trust NNs when there is no human around? How should they be evaluated so that they are reliable? We will explore how to discover spurious correlations that prevent trained models from generalizing, and how to properly evaluate a novel architecture.

Reading:

Bonus:

Kuba, discussed on 2021-01-22.

This three-act story first introduces us to a neuro-symbolic architecture. Then, a video-based reasoning dataset with 4 fundamental types of tasks is presented, where symbolic models may be expected to excel. In a grand finale, DeEpmINd trains a TrAnSfoRMer and outperforms all neuro-symbolic approaches :P

Reading:

Alicja, discussed on 2021-04-10.

Learning to learn - how cool is that?!
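One of the simplest meta-learning algorithms is Reptile (Nichol et al.), a first-order relative of MAML: repeatedly adapt to a sampled task with a few SGD steps, then move the shared initialization a little toward the adapted weights. A minimal sketch on a hypothetical task family (1-D regressions y = slope·x with a random slope per task) and a one-parameter model; all names and hyperparameters are illustrative.

```python
import numpy as np

def sample_task(rng):
    """Hypothetical task family: y = slope * x with slope ~ U(1, 3)."""
    slope = rng.uniform(1.0, 3.0)
    x = rng.uniform(-1.0, 1.0, size=20)
    return x, slope * x

def inner_sgd(theta, x, y, lr=0.1, steps=5):
    """Task-specific adaptation: a few gradient steps on mean squared error."""
    for _ in range(steps):
        grad = np.mean(2.0 * (theta * x - y) * x)
        theta = theta - lr * grad
    return theta

def reptile(n_meta_steps=1000, meta_lr=0.1, seed=0):
    """Move the shared initialization toward each task's adapted weights."""
    rng = np.random.default_rng(seed)
    theta = 0.0
    for _ in range(n_meta_steps):
        x, y = sample_task(rng)
        adapted = inner_sgd(theta, x, y)
        theta = theta + meta_lr * (adapted - theta)
    return theta

theta_init = reptile()  # ends up near the average slope, a good starting point for any task
```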

Reading:

Bonus: